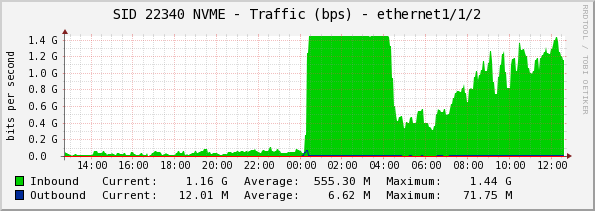

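One server holds a RAID 10 array of four Samsung 850 PRO 256 GB SSDs and two NVMe drives. Both volumes are formatted ext4.

With a data transfer running, throughput hits a ceiling and then stays flat.

When I tested under Windows, speedtest.net showed about 8 Gbps... T.T

So frustrating.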

I'm measuring traffic with 64-bit SNMP counters.
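With 64-bit SNMP counters, throughput is the change in an octet counter between two polls, times 8, divided by the polling interval. The usual counters are `ifHCInOctets`/`ifHCOutOctets` from IF-MIB. The Python sketch below assumes you already have two raw counter samples and the interval between them. The helper name `counter_rate_bps` is hypothetical, and the wrap handling covers at most one rollover between polls.

```python
# Minimal sketch: turn two samples of a 64-bit SNMP octet counter
# (e.g. IF-MIB ifHCOutOctets) into an average bit rate.
COUNTER64_MAX = 2**64


def counter_rate_bps(prev_octets: int, curr_octets: int, interval_s: float) -> float:
    """Average bits per second between two Counter64 samples taken interval_s apart."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    # A Counter64 wraps to 0 after 2**64 - 1; the modulo absorbs a single wrap.
    delta_octets = (curr_octets - prev_octets) % COUNTER64_MAX
    return delta_octets * 8 / interval_s


if __name__ == "__main__":
    # 1.44 Gbps sustained for 60 s corresponds to 1.44e9 * 60 / 8 = 10.8e9 octets.
    rate = counter_rate_bps(0, 10_800_000_000, 60.0)
    print(f"{rate / 1e9:.2f} Gbps")
```

If the graph and a host-side tool disagree, comparing them at the same polling interval helps. Averages over long intervals flatten short bursts.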


Uplink: the 100G backbone connects to four of the ICX-7750-48F's 10/40G ports over four 10G links.

The server connects to the Brocade ICX-7750-48F (48 x 10 Gbps ports) with a 3 m Intel 10G SFP+ DAC cable.

01:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

LnkCap: Port #0, Speed 5GT/s, Width x8, ASPM L0s, Latency L0 unlimited, L1 <8us

ClockPM- Surprise- LLActRep- BwNot-

LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- Retrain- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-


LnkSta: Speed 5GT/s, Width x8, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
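This is a rough check on whether PCIe could be the limit. The `LnkSta` line above reports a PCIe 2.0 link (5 GT/s) with 8 lanes. The ixgbe driver log reports "32GT/s available" with "Encoding Loss:20%" (8b/10b). The Python sketch below subtracts only that encoding loss. Real PCIe protocol overhead (packet headers, flow control) lowers the usable figure somewhat further, so treat the result as an upper bound.

```python
# Rough PCIe link budget from the lspci/dmesg values in this post.
# Assumption: only 8b/10b line encoding is subtracted; TLP/DLLP protocol
# overhead, which further reduces usable bandwidth, is ignored.
def pcie_usable_gbps(gt_per_s: float, lanes: int, encoding_efficiency: float) -> float:
    """Usable bandwidth per direction in Gbit/s, after line encoding only."""
    return gt_per_s * lanes * encoding_efficiency


# From the log: 5.0 GT/s, x8, "Encoding Loss:20%" -> 80% efficient.
link_gbps = pcie_usable_gbps(5.0, 8, 0.8)
# Two 10GbE ports at full line rate, counted in one direction.
needed_gbps = 2 * 10.0
print(f"PCIe 2.0 x8: ~{link_gbps:.0f} Gbit/s per direction; "
      f"two 10G ports need {needed_gbps:.0f} Gbit/s")
```

On these numbers alone, the slot has far more headroom than the 1.2 to 1.44 Gbps ceiling. The estimate does not rule out other platform effects, though.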

ixgbe 0000:01:00.0: PCI INT A -> GSI 26 (level, low) -> IRQ 26

ixgbe 0000:01:00.0: setting latency timer to 64

ixgbe: 0000:01:00.0: ixgbe_check_options: FCoE Offload feature enabled

ixgbe 0000:01:00.0: irq 93 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 94 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 95 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 96 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 97 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 98 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 99 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 100 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 101 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 102 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 103 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 104 for MSI/MSI-X

ixgbe 0000:01:00.0: irq 105 for MSI/MSI-X

ixgbe 0000:01:00.0: PCI Express bandwidth of 32GT/s available

ixgbe 0000:01:00.0: (Speed:5.0GT/s, Width: x8, Encoding Loss:20%)

ixgbe 0000:01:00.0: eth0: MAC: 2, PHY: 12, SFP+: 3, PBA No: 010AFF-0FF

ixgbe 0000:01:00.0: 0c:c4:7a:3a:2f:b2

ixgbe 0000:01:00.0: eth0: Enabled Features: RxQ: 12 TxQ: 12 FdirHash RSC

ixgbe 0000:01:00.0: eth0: Intel(R) 10 Gigabit Network Connection

ixgbe 0000:01:00.1: PCI INT B -> GSI 28 (level, low) -> IRQ 28

ixgbe 0000:01:00.1: setting latency timer to 64

ixgbe: 0000:01:00.1: ixgbe_check_options: FCoE Offload feature enabled

ixgbe 0000:01:00.1: irq 106 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 107 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 108 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 109 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 110 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 111 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 112 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 113 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 114 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 115 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 116 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 117 for MSI/MSI-X

ixgbe 0000:01:00.1: irq 118 for MSI/MSI-X

ixgbe 0000:01:00.1: PCI Express bandwidth of 32GT/s available

ixgbe 0000:01:00.1: (Speed:5.0GT/s, Width: x8, Encoding Loss:20%)

ixgbe 0000:01:00.1: eth1: MAC: 2, PHY: 1, PBA No: 010AFF-0FF

ixgbe 0000:01:00.1: 0c:c4:7a:3a:2f:b3

ixgbe 0000:01:00.1: eth1: Enabled Features: RxQ: 12 TxQ: 12 FdirHash RSC

ixgbe 0000:01:00.1: eth1: Intel(R) 10 Gigabit Network Connection

ixgbe 0000:01:00.0: registered PHC device on eth0

ixgbe 0000:01:00.0: eth0: detected SFP+: 3

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: NIC Link is Down

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: detected SFP+: 3

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: NIC Link is Down

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: changing MTU from 1500 to 9000

ixgbe 0000:01:00.0: eth0: detected SFP+: 3

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: NIC Link is Down

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: changing MTU from 9000 to 1500

ixgbe 0000:01:00.0: eth0: detected SFP+: 3

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: NIC Link is Down

ixgbe 0000:01:00.0: eth0: initiating reset due to lost link with pending Tx work

ixgbe 0000:01:00.0: eth0: Reset adapter

ixgbe 0000:01:00.0: eth0: detected SFP+: 3

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

ixgbe 0000:01:00.0: eth0: NIC Link is Down

ixgbe 0000:01:00.0: eth0: NIC Link is Up 10 Gbps, Flow Control: RX/TX


CPU: Intel Xeon E5-2609 v3 x 2

RAM: 32 GB

Server: Supermicro 1028U-TNRTP+

OS: CentOS 6.6 x86_64


Because I'm using the onboard devices, the NVMe drives and both 10G SFP+ ports are all attached to CPU 1.
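To confirm which socket a network port is attached to, Linux exposes the PCI device's NUMA node and local CPU list in sysfs. The files are `/sys/class/net/<iface>/device/numa_node` and `.../local_cpulist`. The Python sketch below reads both. The interface names `eth0`/`eth1` come from the log in this post, and `device_numa_info` is a hypothetical helper. A `numa_node` of -1 means the platform did not report locality for that device.

```python
from pathlib import Path


def device_numa_info(iface: str, sysfs_root: str = "/sys") -> dict:
    """Read the NUMA node and local CPU list of the PCI device behind a network interface."""
    dev = Path(sysfs_root) / "class" / "net" / iface / "device"
    return {
        "iface": iface,
        # -1 here means the firmware/kernel did not report NUMA locality.
        "numa_node": int((dev / "numa_node").read_text().strip()),
        # CPUs on the same socket as the device, e.g. "0-5".
        "local_cpulist": (dev / "local_cpulist").read_text().strip(),
    }


if __name__ == "__main__":
    for name in ("eth0", "eth1"):
        try:
            print(device_numa_info(name))
        except (OSError, ValueError) as exc:
            # Missing interface, non-PCI device, or unexpected file contents.
            print(f"{name}: {exc}")
```

The reported CPU list can then be compared with where the NIC's IRQs and the transferring process actually run.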

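Last week throughput topped out at 1.2 Gbps and wouldn't go any higher... today it tops out at 1.44 Gbps.

I installed the latest network driver. Could it really be a CPU or PCIe bandwidth limit?

Any help or suggestions would be much appreciated.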

During tests on the RAID volume, the disk load goes up to about 2. On the NVMe drives it stays at 0.02. -.-;

Also, unexpectedly, DAC cables are recognized but can still have compatibility problems.
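The driver log above shows eth0 repeatedly logging `NIC Link is Down`, plus one `initiating reset due to lost link with pending Tx work`. That pattern fits a cable or DAC compatibility suspicion. The Python sketch below tallies these ixgbe messages per interface from a saved `dmesg` file. The message fragments are copied from this post's log, and the script and file names are only examples. A count alone does not prove the cable is at fault. It just makes flaps easy to line up against the throughput graph.

```python
import re
import sys
from collections import Counter

# Message fragments copied from the ixgbe log in this post.
EVENTS = {
    "link_up": "NIC Link is Up",
    "link_down": "NIC Link is Down",
    "tx_reset": "initiating reset due to lost link with pending Tx work",
    "sfp_detect": "detected SFP+",
}
# Matches lines like "ixgbe 0000:01:00.0: eth0: NIC Link is Down".
IFACE_RE = re.compile(r"ixgbe \S+: (eth\d+): (.*)")


def count_link_events(lines):
    """Return a Counter keyed by (interface, event) for the known ixgbe messages."""
    counts = Counter()
    for line in lines:
        m = IFACE_RE.search(line)
        if not m:
            continue
        iface, msg = m.groups()
        for event, fragment in EVENTS.items():
            if fragment in msg:
                counts[(iface, event)] += 1
    return counts


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: python count_link_events.py saved_dmesg.txt")
    else:
        with open(sys.argv[1]) as fh:
            for (iface, event), n in sorted(count_link_events(fh).items()):
                print(f"{iface:6} {event:12} {n}")
```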

And when Windows was installed, I confirmed an 8.8 Gbps upload on speedtest.net. Then I switched to Linux, brought the service up, and ended up in this situation. T_T